How We Test Our 3D Modelling Agent

For months, it's been bothering me: how do I really know if changes to my 3D modelling agent are making it better? Evals (evaluations) are the answer, but building the right kind of eval system for a complex AI agent isn't straightforward.

Evals, for me, are repeatable experiments over the whole agent. Each one uses a prompt set and a specific configuration, runs the workflow end to end, and produces scores I can compare across runs. Runs in result in artefacts (code, preview, 3MF) and the metrics that matter, so a change either wins or loses without blindly guessing.

Because they're standardised and easy to run, I started to lean on them heavily. At the time of writing the suite has produced 1050 generations across 29 runs for $186.26 total (about $6.42 per run). Wall time: 2664m 13s in total, roughly 91m 52s per run. The scale isn't huge, but it's enough signal to steer day to day iteration.

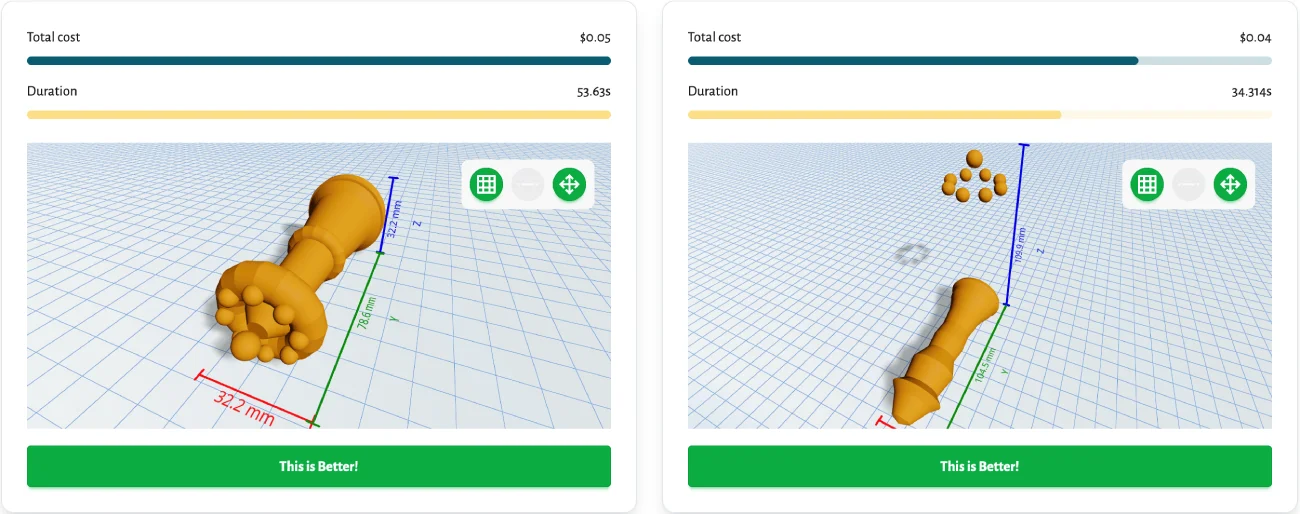

I first tried a simple "battleground" where I could compare two different models side-by-side. It was a start, but it quickly fell apart. My agent isn't just a single LLM call. It's a multi-step workflow. What if I want to use a faster, cheaper model just for repairing generated code? What if I tweak the system prompt? The battleground was too rigid.

Existing eval frameworks didn't fit either. Most are designed for a simple call -> response flow. They also focus on generic metrics like "hallucinations." Frankly, I don't care about hallucinations. I care about whether the agent produces a great, printable 3D model. This meant I needed a custom solution.

The Problem: Evaluating a Complex Agent

So, the core problem was building a flexible evaluation system that could:

- Test the entire agent workflow, not just a single model.

- Accommodate any change, from a different model to a tiny prompt tweak.

- Use custom metrics that actually matter for 3D modelling.

The Solution: Git-Based Evals and Weighted Scoring

After a lot of thought, I landed on a solution: the git commit is the single source of truth.

Any change I want to test—a new model, a different prompt, a refactored workflow—gets committed. I can then run an eval against that specific commit. This encapsulates the entire state of the agent and its configuration. Simple and powerful.

But what are we actually measuring? For generating 3D models, I defined a few key metrics:

- Prompt Adherence: Does the model do what I asked? (Subjective)

- Aesthetics: Does the model look good? (Subjective)

- Duration: How long did it take to generate? (Objective)

- Cost: How much did it cost? (Objective)

- Printability: Is the model manifold? Any other printing issues? (Objective, work in progress)

The first two are subjective. To handle this, I use a two-pronged approach:

- Automatic AI Evals: Another AI model scores adherence and aesthetics.

- Human Evals: I built a simple dashboard to rate them myself.

To keep my own ratings consistent, I created a clear scale. I think this is super important, because six months from now, I'll have forgotten what a "0.3" in aesthetics meant.

Adherence Scale

- <0.2 (Poor): Misses core intent; largely irrelevant or incorrect.

- <0.4 (Weak): Partially relevant; significant omissions or errors.

- <0.6 (Fair): Covers main points but lacks completeness or precision.

- <0.8 (Good): Mostly accurate; minor gaps or deviations.

- <=1.0 (Excellent): Fully aligned; precise, comprehensive, and faithful to intent.

Aesthetics Scale

- <0.2 (Poor): Visually unpleasant or chaotic.

- <0.4 (Mediocre): Dull, imbalanced, or unrefined.

- <0.6 (Decent): Acceptable; some appeal but lacks polish.

- <0.8 (Good): Cohesive, attractive, visually satisfying.

- <=1.0 (Excellent): Striking, elegant, emotionally engaging.

Weighted Scoring

Okay, so now I have all these metrics. How do I combine them into a single score to tell me if a change is good or bad? With a weighted equation.

This lets me tune what I care about most. For example, I could make human-rated adherence the most important factor and speed secondary, ignoring everything else.

Or I could make cost twice as important as speed.

Here’s my current setup for making decisions:

My priorities are:

- Prompt Adherence: The most important thing is that the agent actually makes what you ask for.

- Speed: I've had configurations where generation times exploded to 20 minutes. That's not a great user experience.

- Cost: Generations are already pricey. To offer reasonable plans, I need to keep costs under control.

- Aesthetics: Least important right now while adherence is still a challenge. Function over form, for now. A beautiful box that you can't open isn't very useful.

I also weigh my own scores much more heavily than the AI's scores.

One hard-earned lesson from rating 1000+ models: my own scoring drifts. Even with the rubric on screen I often ended up doing a quick pass/fail at the 0.4 threshold (would I be okay showing this to a user or not). That pushed me towards a simpler human signal. I am experimenting with a binary yes/no for adherence, then letting the weighted score use that as a strong feature. It reduces noise, speeds reviews, and matches how I actually make decisions under time pressure. The AI can keep a continuous score; my rating can be crisp and auditable.

Putting it to the Test

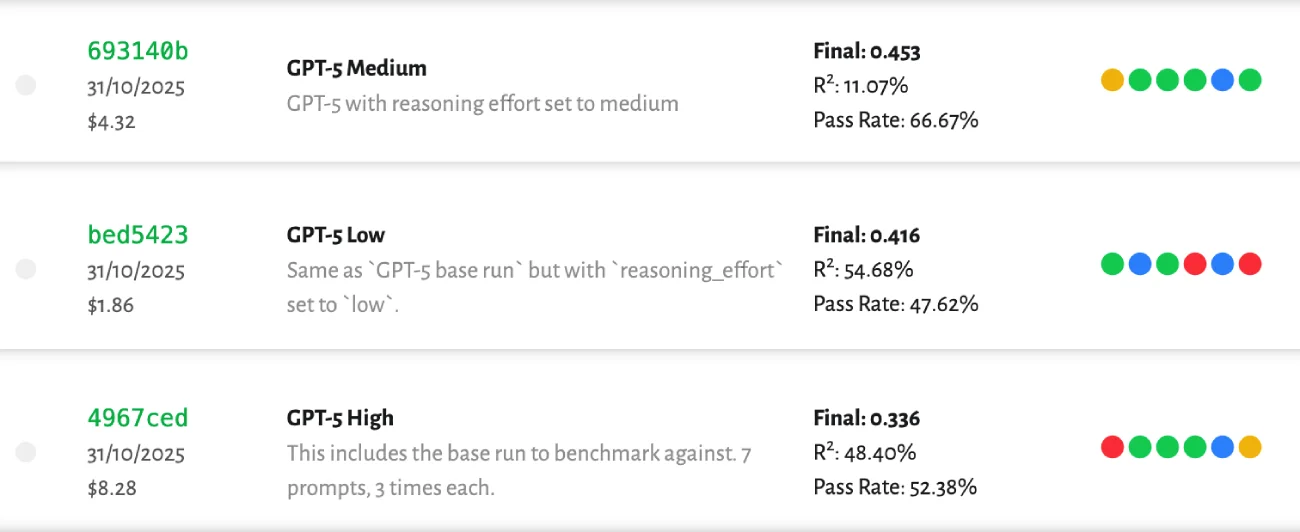

Let's see it in action. I recently changed the agent's "reasoning effort" to "high," but I wasn't sure it was actually helping. It was definitely slower and more expensive. Could I get away with "medium" or "low" effort and cut generation time in half?

I have two sets of prompts I use for evals: a "base" set with 7 prompts and an "extra" set with 27. Each prompt is run 3 times to account for variability, so an extra run generates 81 models. I usually start with the base set to get a general idea. If the results are too close to call I run the full extra set. It's not a huge sample size, but it's way better than flying blind. It already costs about $5 per base run and about $15 per extra run, and this is a side project...

The results were clear:

- High reasoning effort costs 2x as much as Medium and 4x as much as Low.

- There was no noticeable difference in quality between High and Medium.

- Low performed slightly worse than the other two.

- High took 3.6x longer than Low and about 2x longer than Medium.

The winner was obvious: Medium reasoning effort. It provided the same quality as High at half the cost and time. You can see all the results on the evals page.

Since then GPT-5.1 dropped (18.11.2025). Early runs on the same "medium" reasoning setting show 2-3x faster completions than GPT-5 with slightly lower quality on adherence and aesthetics. On overall capability it sits between Gemini 2.5 Pro and GPT-5 for my workload. Given the speed, I now prefer GPT-5.1 for model generation when I need fast iteration, and fall back to GPT-5 when that last bit of quality matters. Both stay in the harness so the scoreboard decides, not vibes.

I'm constantly tweaking variables like these and re-running evals:

const INITIAL_MODEL = "openai/gpt-5";

const INITIAL_REASONING_EFFORT = "medium";

const CODE_ERRORS_MAX = 4;

const FIX_MODEL = "openai/gpt-5-mini";

const FIX_REASONING_EFFORT = "low";

const VISUAL_ADHERENCE_MIN = 0.4;

const VISUAL_ERRORS_MAX = 1;

const VISUAL_EVALUATION_MODEL = "2.5-flash";

const VISUAL_FIX_MODEL = "openai/gpt-5";

const VISUAL_FIX_REASONING_EFFORT = "low";

The Eval Rabbit Hole Goes Deeper

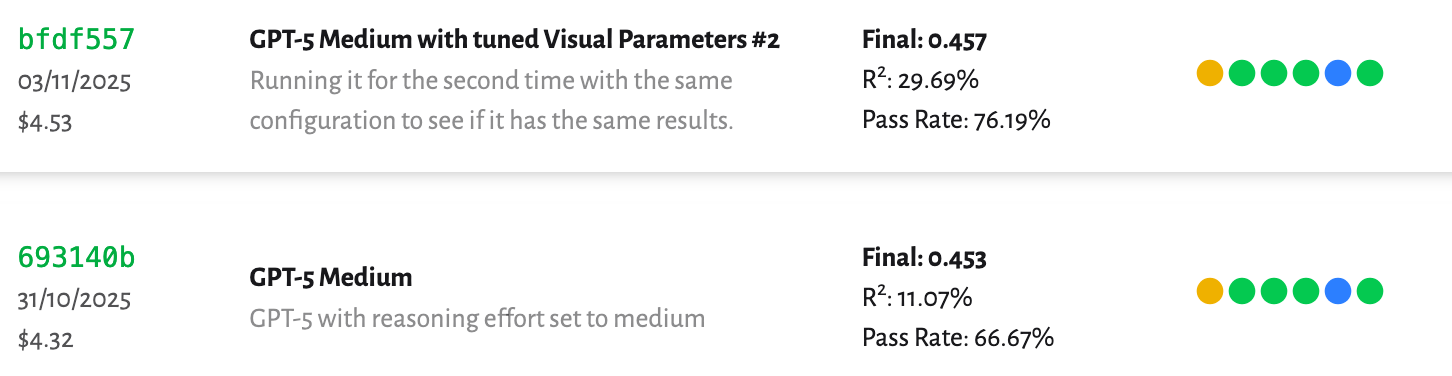

You might be wondering about those VISUAL_ variables. This is where things get interesting. I implemented a "visual check" feature where an AI model looks at a rendered image of the 3D model and scores its prompt adherence. If the score is too low, the agent tries again.

My gut feeling was that it wasn't helping enough to justify the extra time and cost. It was making generations take three times longer. Running the extra prompt set confirmed this suspicion. The visual checks weren't consistent enough. Why? Was the AI's rating just random? Was my threshold too low, causing unnecessary retries?

A Use Case for Linear Regression

I'd heard of linear regression but never had a real use for it. Until now. I have pairs of scores for the same model: (AI score, human score). This is a perfect fit.

In simple terms, linear regression finds the straight line that best fits a set of data points. In my case, the data points are the (AI score, human score) pairs. If the AI is a good judge, its scores should have a linear relationship with my scores. For example, when the AI gives a 0.2, I might consistently give a 0.3. When it gives a 0.8, I might give a 0.7. Linear regression finds the formula for that line.

This is useful for two reasons. First, it lets me train a tiny model to predict my score based on the AI's score. More importantly, it gives me a value called R-squared (R²). This tells me how well the AI scores predict the human scores. An R² of 1 means a perfect prediction; 0 means no correlation at all. I'd be happy with anything over 0.4.

What I found:

- The best model for predicting my scores was

2.5-flash. - There wasn't much difference between flash/pro or GPT-5/GPT-5-mini. Nano was noticeably worse.

After tuning the visual checks with this data, I ran the benchmarks again.

The initial results showed some improvement in the "pass rate" - the number of models with an adherence score above 0.4. But when I dug deeper with the extra prompt set, the R² was actually closer to 0.1 (or 10%). That's... not great. It means the AI's rating is a poor predictor of my own.

So for now, I've disabled the visual checks. It's not just a matter of waiting for the underlying vision models to get better. There are other things I need to explore, like improving the system prompt for the visual check LLM or rendering the model from multiple angles to give the AI a better view. The good news is, I have the entire system ready to go, so I can easily test these ideas and flip the switch when the checks become reliable enough.

Caveats

This system isn't perfect, of course. The test dataset is small, so the results aren't statistically bulletproof, but it's a great start. The human evaluation is also just me and sometimes my girlfriend, so there's personal bias. Finally, the AI evaluation is still a work in progress; an R² of 0.1 shows there's a long way to go.

One more caveat: not all historical evals were configured identically. Early on I discovered a few implementation mistakes and some runs used slightly different accounting, so certain costs or timings are off. The /evals page is still useful, but treat older entries as indicative rather than precise. I'm keeping them for posterity and will start tagging them as "Legacy" as the framework stabilises so comparisons stay honest.

Conclusion

This evaluation system has been critical for making informed decisions. It replaces guesswork with a data-driven approach, which is essential when dealing with so many variables.

I can now systematically test hypotheses and quantify the impact of changes:

- What's the best LLM for prompt adherence?

- Which model generates the most aesthetic results?

- How do changes to the system prompt affect the performance vs. cost trade-off?

- Is it better to use one powerful model for everything, or smaller, specialized models for tasks like code repair?

- Do visual checks actually improve the final output, or just increase cost and latency? (Spoiler: not yet.)

The framework allows for methodical, iterative improvement. For a solo developer building a complex system, having a robust testing harness like this isn't just a nice-to-have, it's a must.