Comparing State-of-the-Art LLMs for 3D Generation

It's been a wild few months for LLMs. We've seen GPT-5 (August 7), GPT-5.1 (November 12), and most recently Gemini 3 (November 19).

For GrandpaCAD, my text-to-3D tool, this matters. I don't care about benchmarks on poetry or hallucinations. I care about one thing: can it write code that generates a printable 3D object?

I ran 84 generations for each model to find out.

To give you an idea of the scale: I burned through 6 million tokens on Gemini 3 in the first 24 hours of its release alone. This isn't a quick "vibes" check. I think my approach here is fairly data-driven.

The Setup

I used my standard evaluation harness. That means 27 unique prompts, ranging from a simple "low poly tree" to a complex "dragon printable without supports". Each prompt is run 3 times to smooth out the variance.
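As a rough illustration of the "each prompt, several times" loop described above, here is a minimal sketch. It is not GrandpaCAD's actual harness: `RunResult`, `run_eval`, `pass_rate`, and the `generate` callback are hypothetical names, and a real run would call a model and check printability instead of using a stub.

```python
from dataclasses import dataclass
from typing import Callable

RUNS_PER_PROMPT = 3  # from the post: each prompt is run 3 times


@dataclass
class RunResult:
    prompt: str
    attempt: int
    passed: bool


def run_eval(
    prompts: list[str],
    generate: Callable[[str], bool],  # hypothetical: returns True if the model is usable
    runs_per_prompt: int = RUNS_PER_PROMPT,
) -> list[RunResult]:
    """Run every prompt several times and record one result per attempt."""
    results: list[RunResult] = []
    for prompt in prompts:
        for attempt in range(1, runs_per_prompt + 1):
            results.append(RunResult(prompt, attempt, generate(prompt)))
    return results


def pass_rate(results: list[RunResult]) -> float:
    """Fraction of attempts that passed (0.0 for an empty run)."""
    if not results:
        return 0.0
    return sum(r.passed for r in results) / len(results)
```

Repeating each prompt matters because a single generation can succeed or fail by chance; averaging over attempts gives a steadier pass rate per model.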

This framework has now tracked 29 full evaluation runs, producing 1,050 3D models in total. I've logged 2,664 minutes (over 44 hours) of generation time and spent $186.26 on API costs to build this dataset.

You can see the raw data on the /evals page. Note that the evals page is a living document, so historical runs might not match perfectly if I've tweaked the harness since then.

The Data

Here is how they stack up.

| Metric | Gemini 3 | GPT-5 | GPT-5.1 |
|---|---|---|---|
| Weighted Score | 0.555 | 0.501 | 0.467 |
| Pass Rate | 79.76% | 80.95% | 67.86% |
| Adherence | 0.57 | 0.54 | 0.46 |
| Avg Cost | $0.14 | $0.18 | $0.26 |
| Avg Time | 1m 24s | 3m 26s | 1m 12s |
| Total Cost (84 runs) | $12.05 | $15.40 | $22.13 |

Gemini 3 is the clear winner for my workload. It has the highest weighted score (0.555) and the highest prompt adherence (0.57). Crucially, it's also the cheapest ($12.05 total) and nearly as fast as GPT-5.1 (1m 24s vs. 1m 12s on average).

GPT-5.1 was a surprise. It's incredibly fast (1m 12s average), but the cost ($22.13 total) is inflated because I ran this benchmark with OpenAI's service_tier: priority. This setting doubles the price for a modest 20-30% speed boost on this model. Even without priority, it's fast, but the quality took a hit with a pass rate of only 67.86%. For now, the speed isn't worth the drop in reliability.
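To put the priority-tier effect in numbers, here is a back-of-the-envelope sketch. It assumes the multiplier is exactly 2x, as stated above, and that token usage would be identical at standard tier; the result is an estimate, not a measured run, and `estimate_standard_cost` is a hypothetical helper.

```python
# Assumption taken from the post: priority tier "doubles the price".
PRIORITY_PRICE_MULTIPLIER = 2.0


def estimate_standard_cost(
    priority_cost: float, multiplier: float = PRIORITY_PRICE_MULTIPLIER
) -> float:
    """Estimate what a priority-tier bill would have been at standard tier."""
    if multiplier <= 0:
        raise ValueError("multiplier must be positive")
    return priority_cost / multiplier


gpt51_priority_total = 22.13  # total for 84 runs, from the table
gpt51_standard_estimate = estimate_standard_cost(gpt51_priority_total)
```

Under those assumptions the 84 GPT-5.1 runs would have cost roughly $11.07 at standard tier, slightly below Gemini 3's $12.05, although the generations would also have been slower.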

GPT-5 remains a solid, if slow, option. It actually had the highest pass rate (80.95%), but it takes over 3 minutes per generation on average (running without priority tier).
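The table figures can be cross-checked with a little arithmetic. The sketch below recovers the number of passing generations from each pass rate and recomputes the average cost from the totals. The "cost per passing generation" metric is my own illustrative addition, not something the eval harness reports.

```python
TOTAL_RUNS = 84  # generations per model, from the table

# Pass rate (%) and total cost (USD), copied from the table.
TABLE = {
    "Gemini 3": {"pass_rate": 79.76, "total_cost": 12.05},
    "GPT-5": {"pass_rate": 80.95, "total_cost": 15.40},
    "GPT-5.1": {"pass_rate": 67.86, "total_cost": 22.13},
}


def passes_from_rate(pass_rate_pct: float, runs: int = TOTAL_RUNS) -> int:
    """Recover the whole number of passing runs from a rounded percentage."""
    return round(pass_rate_pct / 100 * runs)


def summarize(table: dict, runs: int = TOTAL_RUNS) -> dict:
    """Derive passes, average cost per run, and cost per passing run."""
    summary = {}
    for model, row in table.items():
        passes = passes_from_rate(row["pass_rate"], runs)
        summary[model] = {
            "passes": passes,
            "avg_cost": row["total_cost"] / runs,
            # Illustrative metric: what one *usable* model costs on average.
            "cost_per_pass": row["total_cost"] / passes,
        }
    return summary


SUMMARY = summarize(TABLE)
```

The pass rates correspond to 67, 68, and 57 passing generations out of 84, and the recomputed averages round to the $0.14, $0.18, and $0.26 in the table. Counting only usable output, the cost per passing generation comes to roughly $0.18 for Gemini 3, $0.23 for GPT-5, and $0.39 for GPT-5.1 (at priority pricing).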

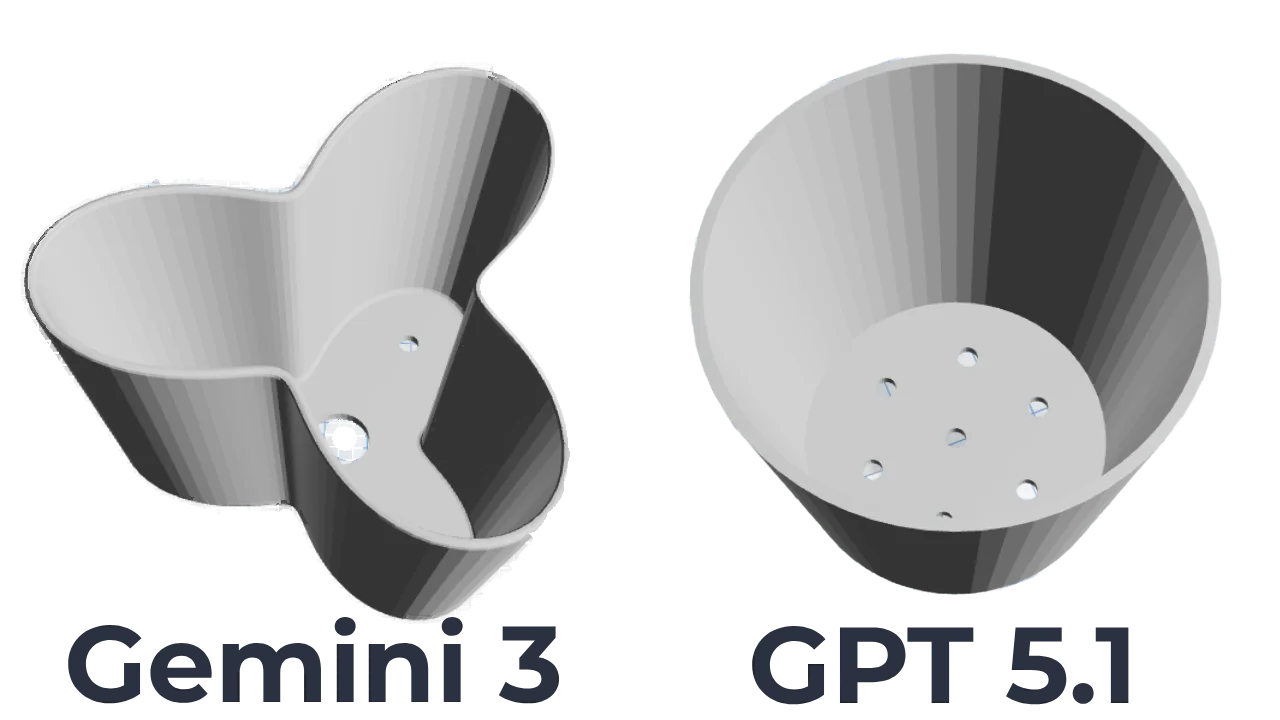

Gemini 3: Creativity + Intelligence

The numbers don't tell the whole story. Gemini 3 feels different. It's quirky.

When I asked previous models to make a "stackable 3D pot", I usually got a cylinder with a lip. Functional, but boring. Gemini 3 made something genuinely creative.

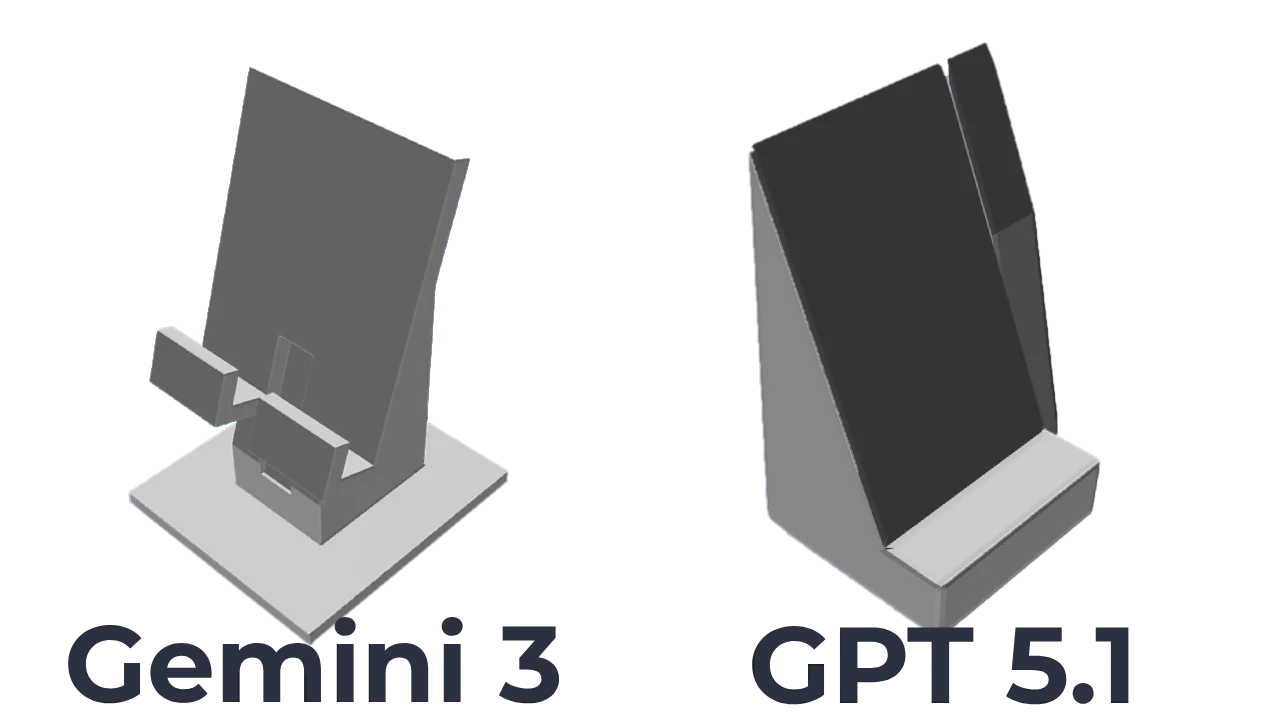

It also has a much better sense of spatial reasoning. I often test models with: "Make a simple smartphone stand that can hold a phone vertically and horizontally."

Most models struggle to position the slot or the backrest correctly relative to the phone's center of gravity. Gemini 3 nailed the relative scale and positioning immediately. Even though the eval runs didn't produce its best results, I've seen better results from it than I have ever seen from any other model.

My girlfriend, who has been patiently testing these generations with me (and usually getting frustrated), actually noticed the difference.

"Vito look at that, it did such a good job!"

That's the first time she's been excited by an improvement. For this project, function comes first. Aesthetics are a nice-to-have, but if the part doesn't fit or print, it's useless. Gemini 3 seems to understand the nuance of the request better.

Visual Comparison

Here is a grid of some of the results across the models.


It's not all perfect: there are many failures across all the models.

Verdict

I'm switching the default model to Gemini 3. The combination of high adherence, low cost, and "common sense" spatial reasoning makes it the best choice for 3D generation right now.

Do you want to see more future benchmarks related to 3D generation?

Do you want to try 3D Model generations?